Neural Network Tutorials - Herong's Tutorial Examples - v1.22, by Herong Yang

Simple Model in Playground

This section provides a tutorial example on how to build a simple neural network model with Deep Playground using the simplest classification dataset. The model uses no hidden layers and a linear activation function.

The best way to get familiar with Deep Playground is to build a simple neural network model as shown in this tutorial.

1. Go to the Web-based version of Deep Playground at https://playground.tensorflow.org.

2. Change the activation to the "Linear" function.

3. Remove all hidden layers and keep only 2 input features: x1 and x2.

4. Keep "Problem Type" as "Classification".

5. Select the third dataset pattern where two output groups can be separated by a straight line. This represents the simplest learning task from a mathematical point of view. A linear function can be easily constructed to solve this classification problem without using any neural networks.

6. Change "Ratio of training to test data" to 90%. Only 10% of the data is left for testing.
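The dataset and the 90/10 split chosen in the steps above can be imitated offline with a few lines of Python. This is only a rough sketch: the cluster centers at (2, 2) and (-2, -2), the spread of 1.0, and the helper names `make_two_clusters` and `split_train_test` are assumptions made for illustration, not the Playground's actual data generator.

```python
import random

def make_two_clusters(n_per_class=100, center=2.0, spread=1.0, seed=0):
    """Return shuffled (x1, x2, label) samples; label is +1 or -1.

    Assumption: two Gaussian clusters centered at (center, center)
    and (-center, -center), which a straight line can separate.
    """
    rng = random.Random(seed)
    samples = []
    for label, c in ((+1, center), (-1, -center)):
        for _ in range(n_per_class):
            samples.append((rng.gauss(c, spread), rng.gauss(c, spread), label))
    rng.shuffle(samples)
    return samples

def split_train_test(samples, train_ratio=0.9):
    """Split samples into a training part and a testing part."""
    cut = round(len(samples) * train_ratio)
    return samples[:cut], samples[cut:]

samples = make_two_clusters()
train, test = split_train_test(samples, train_ratio=0.9)
print(len(train), "training samples,", len(test), "testing samples")
```

With 200 samples in total, a ratio of 90% leaves 180 samples for training and 20 for testing.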

Now we have a simple 1-layer neural network with 2 input nodes and a linear activation function. Mathematically, it can be expressed as:

Matrix format:

y = linear(W · x)

linear(z) = z/6

Expanded format:

y = (W1*x1 + W2*x2)/6
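The expanded format translates directly into code. The sketch below follows the formula exactly as written in this tutorial, including the division by 6; the function names `linear` and `predict` are chosen here for illustration only.

```python
def linear(z):
    """Activation function as written in this tutorial: z / 6."""
    return z / 6.0

def predict(w, x):
    """Compute y = linear(W . x) for a weight list w and an input list x."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    return linear(z)

# Same input point, two different weight matrices.
print(predict([0.5, 0.5], [3.0, 3.0]))   # (1.5 + 1.5) / 6 = 0.5
print(predict([0.5, -0.5], [3.0, 3.0]))  # (1.5 - 1.5) / 6 = 0.0
```

A positive output corresponds to one class and a negative output to the other, so only the sign of y matters for classification.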

We can verify the above model by looking at the output area, which displays the background in two colors with intensity changing linearly in one direction. Since the model has not been trained yet, the output is generated with random initial values in the weight matrix, W.

The accuracy of the model can be visually evaluated by checking if all positive samples are in the blue area and all negative samples are in the orange area of the background.
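The same visual check can be expressed as a number. The sketch below counts a sample as correct when the sign of the model output matches the sign of its label; the four sample points and the `accuracy` helper are made up for illustration and are not taken from the Playground.

```python
def predict(w, x1, x2):
    """Model from this tutorial: y = (W1*x1 + W2*x2) / 6."""
    return (w[0] * x1 + w[1] * x2) / 6.0

def accuracy(w, samples):
    """Fraction of samples whose label sign matches the output sign."""
    correct = sum(1 for x1, x2, label in samples
                  if predict(w, x1, x2) * label > 0)
    return correct / len(samples)

# Hypothetical samples: (x1, x2, label), label +1 = positive, -1 = negative.
samples = [(2.0, 2.5, +1), (1.5, 3.0, +1), (-2.0, -1.0, -1), (-3.0, -2.5, -1)]

print(accuracy([0.5, 0.5], samples))    # 1.0: every sample on the right side
print(accuracy([-0.5, -0.5], samples))  # 0.0: every sample on the wrong side
```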

If you are lucky, the initial weight matrix can be good enough to give you an accurate model without any training.

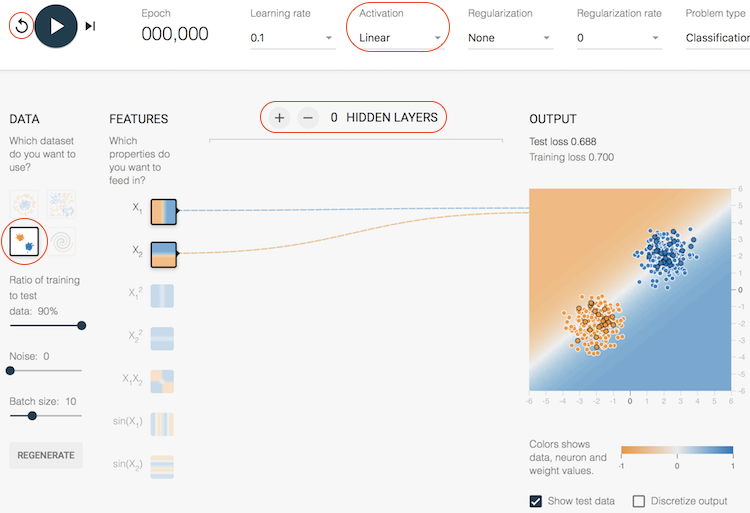

In order to see the impact of the training process, click the "reset" icon repeatedly to change the initial weight matrix until you see a pretty poor output, where the colors of the samples do not match the predictions (background color) at all, as shown in the picture below.

By looking at the output, we can guess that the model is very close to the following expression:

Model with a poor initial weight matrix: y = (0.5*x1 - 0.5*x2)/6

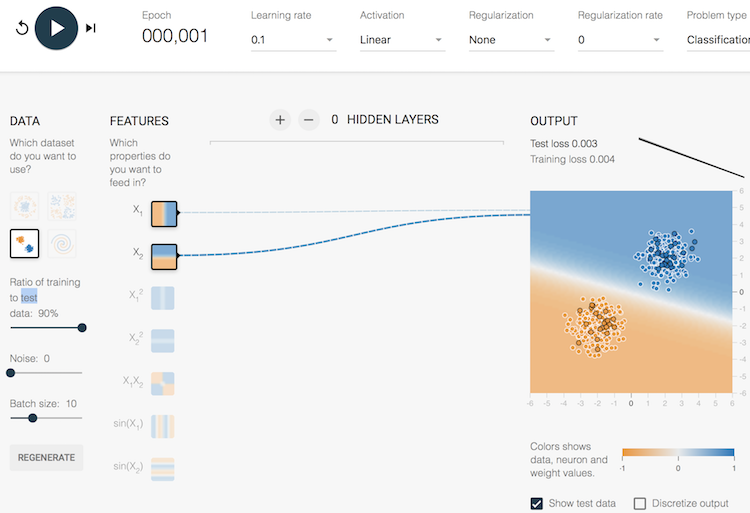

Let's click the "play one epoch" icon in the play control area. The weight matrix will be updated by running 1 round (epoch) of training on the training samples (90% of the data, as set in step 6) at a learning rate of 0.1. The result is pretty good as shown below. This is expected. Mathematically, a 2-dimensional linear problem can be solved by a linear function with only 2 samples.
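To get a feel for what one epoch does to the weight matrix, here is a minimal gradient descent sketch. It assumes a squared-error loss and one weight update per sample, which may differ from how the Playground batches its updates; the four samples and the `train_one_epoch` helper are hypothetical.

```python
def predict(w, x1, x2):
    """Model from this tutorial: y = (W1*x1 + W2*x2) / 6."""
    return (w[0] * x1 + w[1] * x2) / 6.0

def train_one_epoch(w, samples, learning_rate=0.1):
    """Run one pass over the samples and return the updated weights.

    Assumption: loss = 0.5 * (y - label)^2, so the gradient with
    respect to Wi is (y - label) * xi / 6.
    """
    w = list(w)
    for x1, x2, label in samples:
        error = predict(w, x1, x2) - label
        w[0] -= learning_rate * error * x1 / 6.0
        w[1] -= learning_rate * error * x2 / 6.0
    return w

# Hypothetical training samples: (x1, x2, label).
samples = [(2.0, 2.0, +1), (-2.0, -2.0, -1), (2.5, 1.5, +1), (-1.5, -2.5, -1)]

w = [0.5, -0.5]                      # the poor initial weight matrix
w_after_one = train_one_epoch(w, samples)
print("after 1 epoch:", w_after_one)

w_after_many = w
for _ in range(50):
    w_after_many = train_one_epoch(w_after_many, samples)
print("after 50 epochs:", w_after_many)
```

In this sketch the negative weight moves upward after the first epoch and both weights end up positive after more epochs. The exact values depend on the samples and the loss function, so they should not be expected to match the numbers shown in the Playground.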

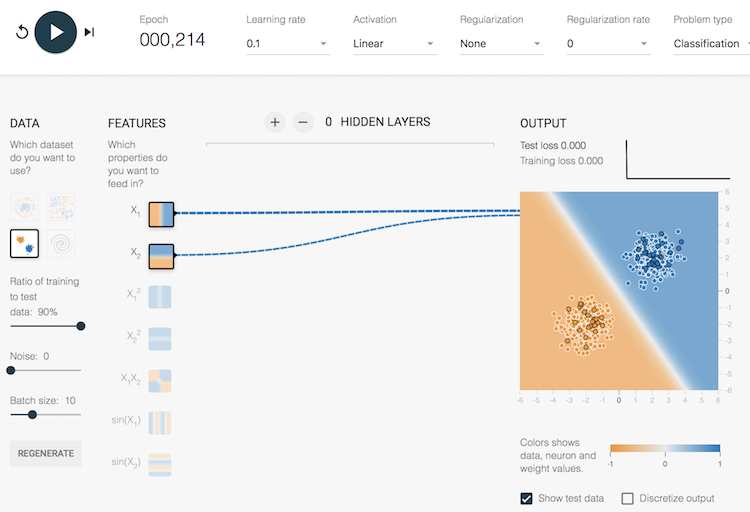

If you click the "play" icon to resume the training with more epochs, the model will converge to the best solution pretty quickly as shown below.

Looking at the output after 214 epochs shown in the above picture, we can guess that the model is very close to the following expression now:

Model with a good weight matrix: y = (0.5*x1 + 0.5*x2)/6

The current weight matrix of the model is also visualized as the color of each link between input and output. So 2 blue links represent 2 positive values in the weight matrix, pretty close to (0.5, 0.5) used in the above expression.

I think we have a good understanding of how to use Deep Playground now by going through this simple model. We will look at the impacts of using other input features, other activation functions and hidden layers in the next tutorials.
