Neural Network Tutorials - Herong's Tutorial Examples - v1.22, by Herong Yang

Impact of Additional Hidden Layers and Neurons

This section provides a tutorial example to show the impact of additional hidden layers and additional neurons on a simple neural network model created in Deep Playground.

After looking at the impact of extra input features, let's now look at the impact of extra hidden layers and extra neurons (nodes) in this tutorial.

1. Continue with the previous tutorial: a 1-layer model with the linear activation function on the linear classification problem.

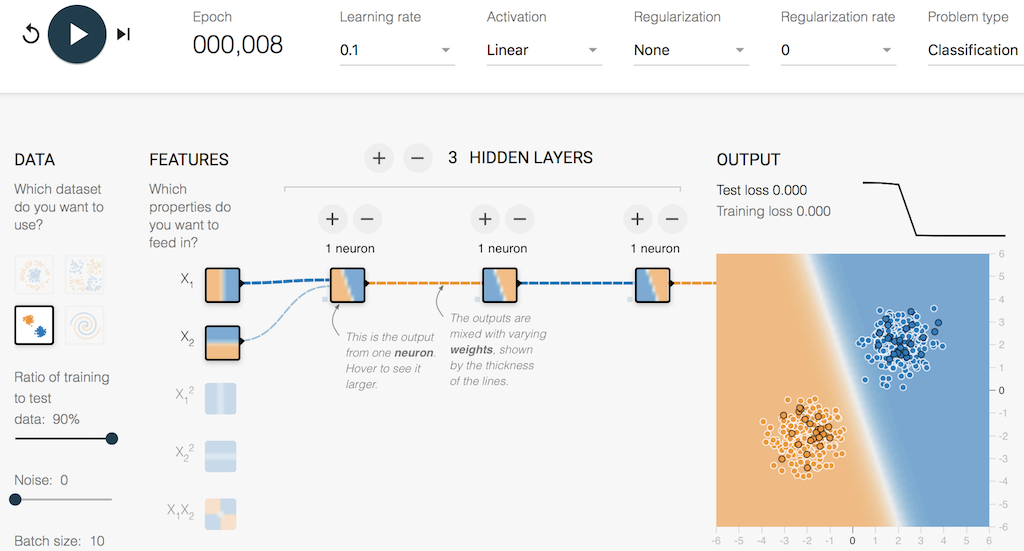
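For readers who want to see the arithmetic, here is a minimal NumPy sketch of what a 1-layer model with a linear activation computes: a weighted sum of the two inputs plus a bias, with the class read from the sign of the result. The toy data points, the weights `w` and `b`, and the sign-based decision are hypothetical illustrations, not values taken from Deep Playground.

```python
import numpy as np

def linear_model(X, w, b):
    """1-layer model: weighted sum of the inputs plus a bias, no hidden layers."""
    return X @ w + b

# Hypothetical toy data: two linearly separable clusters labeled -1 and +1.
X = np.array([[-2.0, -1.5], [-1.0, -2.5], [2.0, 1.5], [1.5, 2.5]])
y = np.array([-1, -1, 1, 1])

# Hypothetical hand-picked weights (not values reported by the Playground).
w = np.array([0.5, 0.5])
b = 0.0

scores = linear_model(X, w, b)
pred = np.sign(scores)  # assumed decision rule: class = sign of the output
print(scores)
print(pred)
```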

2. Add 3 hidden layers with 1 neuron in each hidden layer.

3. Play with different initial weight matrices. It takes fewer than 10 epochs to reach a stable state, where "Training loss" reaches 0.00. One example is shown below. Interestingly, the color pattern of the first hidden layer shows that its output is already a good solution. But the second hidden layer produces the exact opposite solution through a negative weight. This opposite solution is passed to the third hidden layer through a positive weight. Finally, it is reversed back to the correct solution in the output layer through a negative weight.
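The sign flipping described in step 3 can be traced numerically. The sketch below assumes linear activations, omits biases, and uses hypothetical weights (`w_in` and the three `chain` weights) chosen to mimic the negative, positive, negative pattern; at each layer it checks whether the signs of the outputs agree with the labels.

```python
import numpy as np

# Hypothetical toy data: two linearly separable clusters labeled -1 and +1.
X = np.array([[-2.0, -1.5], [-1.0, -2.5], [2.0, 1.5], [1.5, 2.5]])
y = np.array([-1.0, -1.0, 1.0, 1.0])

w_in = np.array([0.5, 0.5])   # inputs -> hidden layer 1 (1 neuron), hypothetical
chain = [-0.8, 0.9, -1.2]     # hidden 1 -> hidden 2 -> hidden 3 -> output, hypothetical

h = X @ w_in                  # hidden layer 1 output already separates the classes
outputs = [h]
for w in chain:
    h = w * h                 # linear activation, bias omitted for simplicity
    outputs.append(h)

for name, out in zip(["hidden 1", "hidden 2", "hidden 3", "output"], outputs):
    agrees = np.array_equal(np.sign(out), y)
    print(f"{name}: signs match labels = {agrees}")
# prints True, False, False, True for the four layers
```

Each single-neuron layer only multiplies the previous value by one number, so the sign pattern can flip back and forth but the separation found by the first hidden layer never changes.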

As you can see, single-neuron hidden layers do not help the model: with the linear activation function, each one can only scale the previous output (possibly flipping its sign) and shift it by a bias. So don't use single-neuron hidden layers in a neural network; they are a waste of resources.

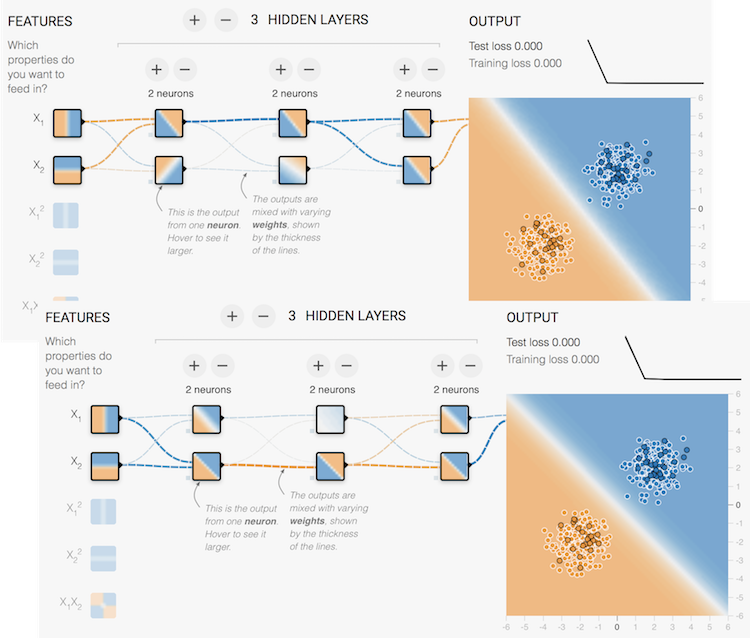
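Why the extra single-neuron layers add nothing: with a linear activation, each such layer computes `w * h + b`, and any chain of these folds into a single scale `a` and offset `c`. A minimal sketch, assuming each neuron has one incoming weight and one bias; the numbers are hypothetical.

```python
import numpy as np

# Hypothetical weights and biases for the single-neuron links after hidden layer 1.
weights = [-0.8, 0.9, -1.2]
biases = [0.1, -0.2, 0.05]

def chain_forward(h1):
    """Pass hidden-layer-1 output through the single-neuron linear layers."""
    h = h1
    for w, b in zip(weights, biases):
        h = w * h + b
    return h

# Fold the whole chain into one affine map h -> a*h + c.
a, c = 1.0, 0.0
for w, b in zip(weights, biases):
    a, c = w * a, w * c + b

h1 = np.linspace(-3.0, 3.0, 7)
print(a, c)
print(np.allclose(chain_forward(h1), a * h1 + c))  # True
```

Since the folded map is just one scale and one offset, the layers after the first hidden layer can never separate the data better than that first neuron already does.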

4. Expand all 3 hidden layers to 2 neurons each.

5. Play with different initial weight matrices. It takes fewer than 10 epochs to reach a stable state. Different initial values lead to different stabilized weight matrices. Two examples are shown below.
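To reproduce the effect of step 5 outside the browser, the sketch below trains a 2-2-2-2-1 network with linear activations by plain full-batch gradient descent on a mean squared error, starting from two different random seeds. It only approximates the Playground setup under stated assumptions: biases are omitted, labels are -1 and +1, the four-point data set is hypothetical, and the learning rate, epoch count, and Gaussian initialization scale are arbitrary choices.

```python
import numpy as np

# Hypothetical toy data: two linearly separable clusters labeled -1 and +1.
X = np.array([[-2.0, -1.5], [-1.0, -2.5], [2.0, 1.5], [1.5, 2.5]])
y = np.array([[-1.0], [-1.0], [1.0], [1.0]])

# Layer sizes: 2 inputs, three hidden layers of 2 neurons, 1 output.
SIZES = [2, 2, 2, 2, 1]

def init(seed, scale=0.5):
    """Random initial weight matrices (assumed Gaussian init, no biases)."""
    rng = np.random.default_rng(seed)
    return [rng.normal(0.0, scale, size=(m, n)) for m, n in zip(SIZES[:-1], SIZES[1:])]

def forward(weights, X):
    """Return the activations of every layer; the activation function is linear."""
    acts = [X]
    for W in weights:
        acts.append(acts[-1] @ W)
    return acts

def train(seed, epochs=10000, lr=0.01):
    """Full-batch gradient descent on mean(0.5 * (output - y)**2)."""
    weights = init(seed)
    for _ in range(epochs):
        acts = forward(weights, X)
        grad = (acts[-1] - y) / len(X)      # gradient w.r.t. the network output
        for i in reversed(range(len(weights))):
            grad_W = acts[i].T @ grad       # gradient w.r.t. layer i weights
            grad = grad @ weights[i].T      # propagate back before updating layer i
            weights[i] -= lr * grad_W
    return weights

w_a, w_b = train(seed=0), train(seed=1)
for name, w in [("seed 0", w_a), ("seed 1", w_b)]:
    correct = np.array_equal(np.sign(forward(w, X)[-1]), y)
    print(f"{name}: all points classified correctly = {correct}")
    print(np.round(w[0], 3))                # first-layer weights differ by seed
```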

As you can see, additional neurons in the hidden layers create more possible combinations of weight matrices that solve this simple linear problem.
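One way to see why more neurons mean more possible weight matrices: with linear activations, any invertible 2x2 matrix `M` can be absorbed between two hidden layers (replace `W1` by `W1 @ M` and `W2` by `inv(M) @ W2`) without changing the network's output. A minimal sketch with hypothetical random weights and biases omitted; swapping two neurons is the special case where `M` is a permutation matrix.

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical weights for a 2-2-2-2-1 network with linear activations, no biases.
W1, W2, W3, W4 = (rng.normal(size=s) for s in [(2, 2), (2, 2), (2, 2), (2, 1)])

# Any invertible M (here det(M) = -1) can be moved between layers 1 and 2.
M = np.array([[2.0, 1.0], [0.0, -0.5]])
W1_alt, W2_alt = W1 @ M, np.linalg.inv(M) @ W2

X = rng.normal(size=(5, 2))                  # hypothetical input points
out = X @ W1 @ W2 @ W3 @ W4
out_alt = X @ W1_alt @ W2_alt @ W3 @ W4
print(np.allclose(out, out_alt))             # True: same outputs, different weights
```

With a single neuron per layer, `M` shrinks to one nonzero number, which is exactly the sign flipping and scaling seen in step 3; with 2 neurons, a whole family of matrices gives the same solution.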
