Neural Network Tutorials - Herong's Tutorial Examples - v1.22, by Herong Yang

Impact of Activation Functions

This section provides a tutorial example that demonstrates the impact of the activation function used in a neural network model. For the complex classification problem in Deep Playground, "ReLU" appears to be a better activation function than "Tanh", "Sigmoid" and "Linear".

In previous tutorials, we have been using the ReLU() activation function for the complex classification problem in Deep Playground.

In this tutorial, let's compare all 4 activation functions supported in Deep Playground: ReLU(), Tanh(), Sigmoid() (or Logistic()) and Linear(). They are defined as ReLU(x) = max(0, x), Tanh(x) = (e^x - e^-x)/(e^x + e^-x), Sigmoid(x) = 1/(1 + e^-x) and Linear(x) = x.
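The four functions listed above can be written out in Python as a quick reference. This is a minimal sketch using the standard textbook formulas, not Deep Playground's own source code; the function names `relu`, `tanh`, `sigmoid` and `linear` are chosen here for illustration only.

```python
import math

# Minimal sketch of the four activation functions, using the standard
# textbook formulas (not Deep Playground's own source code).

def relu(x: float) -> float:
    """ReLU(x) = max(0, x)"""
    return max(0.0, x)

def tanh(x: float) -> float:
    """Tanh(x) = (e^x - e^-x) / (e^x + e^-x)"""
    return math.tanh(x)

def sigmoid(x: float) -> float:
    """Sigmoid(x) = Logistic(x) = 1 / (1 + e^-x)"""
    # Two branches so math.exp never overflows for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def linear(x: float) -> float:
    """Linear(x) = x (identity)"""
    return x

ACTIVATIONS = {"ReLU": relu, "Tanh": tanh, "Sigmoid": sigmoid, "Linear": linear}

# Print each function's value at x = -2, 0 and 2.
for name, f in ACTIVATIONS.items():
    print(name, [round(f(x), 3) for x in (-2.0, 0.0, 2.0)])
```

For example, `sigmoid(0.0)` returns 0.5 and `relu(-2.0)` returns 0.0. The two-branch `sigmoid` is a design choice to keep `math.exp` from overflowing on large negative inputs.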

1. Reset the model with the complex classification problem, using a 90% training set, a 0.1 learning rate, 2 hidden layers and 6 neurons in each layer.

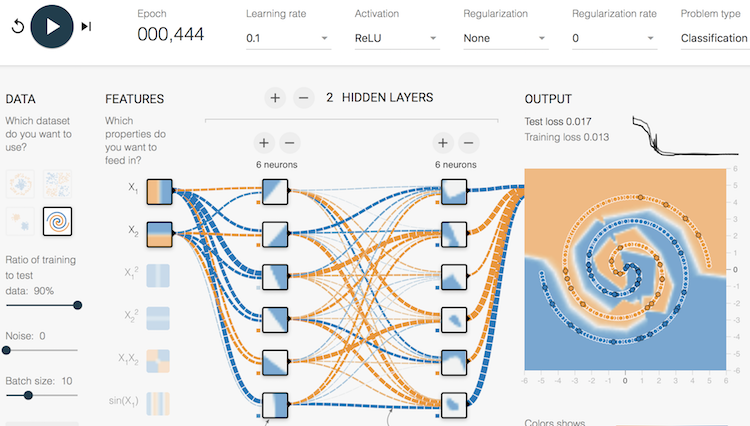

2. Select "ReLU" as the activation function and play the model. It should reach a good solution most of the time, though how quickly varies from run to run. The picture below shows a solution reached in about 100 epochs.

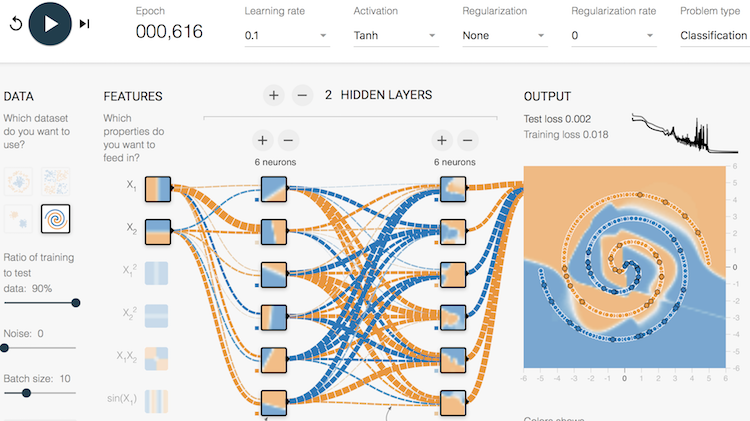

3. Select "Tanh" as the activation function and play it again. It should reach a good solution most of the time, with some oscillations along the way. Perhaps a lower learning rate should be used.

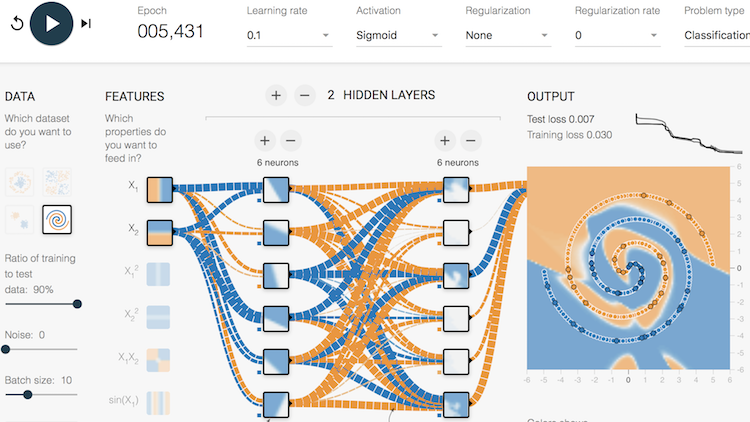

4. Select "Sigmoid" as the activation function and play it again. It should always reach a good solution, but it gets there very slowly, taking more than 5,000 epochs in the run shown below.

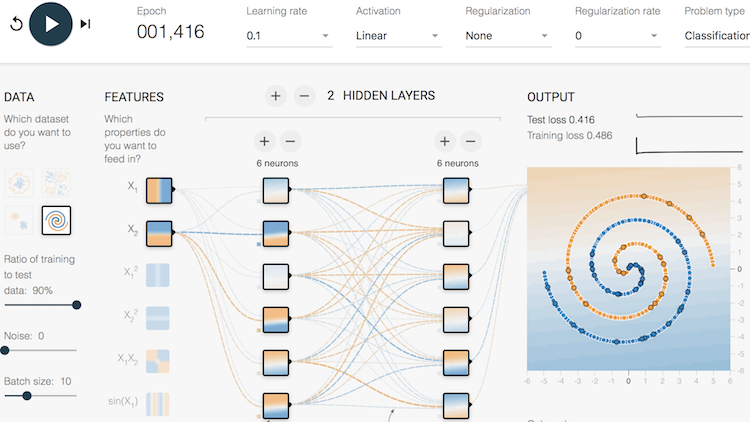

5. Select "Linear" as the activation function and play it again. It fails to reach any solution.
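One way to see why "Linear" fails: with the identity function as activation, every layer is an affine map x -> W·x + b, and a stack of affine maps collapses into a single affine map. The sketch below builds a hypothetical 2-6-6-1 network with random weights (2 inputs, assumed here to be just X1 and X2), folds its three layers into one `w·x + b`, and checks that both give the same output. The helper names (`random_layer`, `forward`, `collapse`) are made up for this illustration and are not part of Deep Playground.

```python
import random

# Hypothetical 2-6-6-1 network in which every neuron uses the Linear
# (identity) activation. Inputs are assumed to be just X1 and X2.

def matvec(a, v):
    """Matrix-vector product a·v (a is a list of rows)."""
    return [sum(aij * vj for aij, vj in zip(row, v)) for row in a]

def matmul(a, b):
    """Matrix product a·b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def random_layer(n_in, n_out, rng):
    """Random weights (n_out x n_in) and biases (n_out) in [-1, 1]."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases

def forward(x, layers):
    """Run x through each layer: Linear(W·x + b) = W·x + b."""
    for weights, biases in layers:
        x = [z + c for z, c in zip(matvec(weights, x), biases)]
    return x[0]

def collapse(layers):
    """Fold the stacked layers into one affine map x -> w·x + b."""
    w, b = layers[0]
    for wn, bn in layers[1:]:
        b = [z + c for z, c in zip(matvec(wn, b), bn)]  # b <- Wn·b + bn
        w = matmul(wn, w)                               # w <- Wn·w
    return w, b

rng = random.Random(0)
layers = [random_layer(2, 6, rng), random_layer(6, 6, rng), random_layer(6, 1, rng)]
w, b = collapse(layers)
x = [0.3, -0.7]
print("deep network:", forward(x, layers))
print("single layer:", matvec(w, x)[0] + b[0])  # same value, up to rounding
```

Because the whole network reduces to one `w·x + b`, its decision boundary on the X1/X2 plane is a straight line, no matter how many hidden layers or neurons are added. Applying a monotonic function such as tanh at the output would not change that, since thresholding it still cuts the plane along a straight line, which cannot follow the curved class regions of the complex problem.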

Conclusion: for the complex classification problem, the "ReLU", "Tanh" and "Sigmoid" activation functions are all able to reach good solutions. However, "Sigmoid" seems to make "smaller" updates than "ReLU" and "Tanh", so it takes longer to reach a solution. "Linear" is not able to reach any solution.
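A plausible reason for the "smaller" updates with "Sigmoid" (offered as a hedged explanation, not something measured in the Playground runs) is the size of the activation derivatives. During backpropagation, the error signal is multiplied by the activation function's derivative once per hidden layer. The derivative of Sigmoid never exceeds 0.25, while the derivatives of Tanh and ReLU reach 1. The sketch below checks those peaks numerically and raises them to the power of the 2 hidden layers from step 1, ignoring the weights. The grid search in the hypothetical `peak` helper is just a simple numeric check chosen for this illustration.

```python
import math

# Derivatives of the activation functions, using their standard closed forms.

def sigmoid(x):
    # Two branches so math.exp never overflows for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def d_sigmoid(x):
    s = sigmoid(x)
    return s * (1.0 - s)            # peaks at 0.25 when x = 0

def d_tanh(x):
    return 1.0 - math.tanh(x) ** 2  # peaks at 1.0 when x = 0

def d_relu(x):
    return 1.0 if x > 0 else 0.0    # 1.0 for every positive input

def peak(fn, lo=-5.0, hi=5.0, steps=1001):
    """Largest derivative value on an evenly spaced grid over [lo, hi]."""
    return max(fn(lo + (hi - lo) * i / (steps - 1)) for i in range(steps))

HIDDEN_LAYERS = 2  # as configured in step 1

for name, fn in [("Sigmoid", d_sigmoid), ("Tanh", d_tanh), ("ReLU", d_relu)]:
    p = peak(fn)
    # Upper bound on the product of activation derivatives alone
    # (weights ignored) when the gradient passes through each hidden layer.
    print(f"{name:8s} max f'(x) = {p:.4f}, "
          f"bound over {HIDDEN_LAYERS} layers = {p ** HIDDEN_LAYERS:.4f}")
```

With 2 hidden layers, the product of Sigmoid derivatives is at most 0.25² = 0.0625, whereas Tanh and ReLU can pass the gradient through at full strength. This is consistent with, though not proof of, the slower progress seen in step 4.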
